Today, the success of organizations large and small depends in great part on the Internet, which they use to communicate and interact with their clients. Many of these activities rely on data gathered from the web, and web scraping tools, including those built for Python, make that data accessible.

As demand for technology has grown, data has become an essential part of modern life. Whatever kind of business you run, you will sooner or later need to collect data relevant to it. Without a web scraping tool, however, gathering that data is not a simple task.

Do you want to learn more about web scraping and the tools used for it? Look no further: this article covers ten of them.

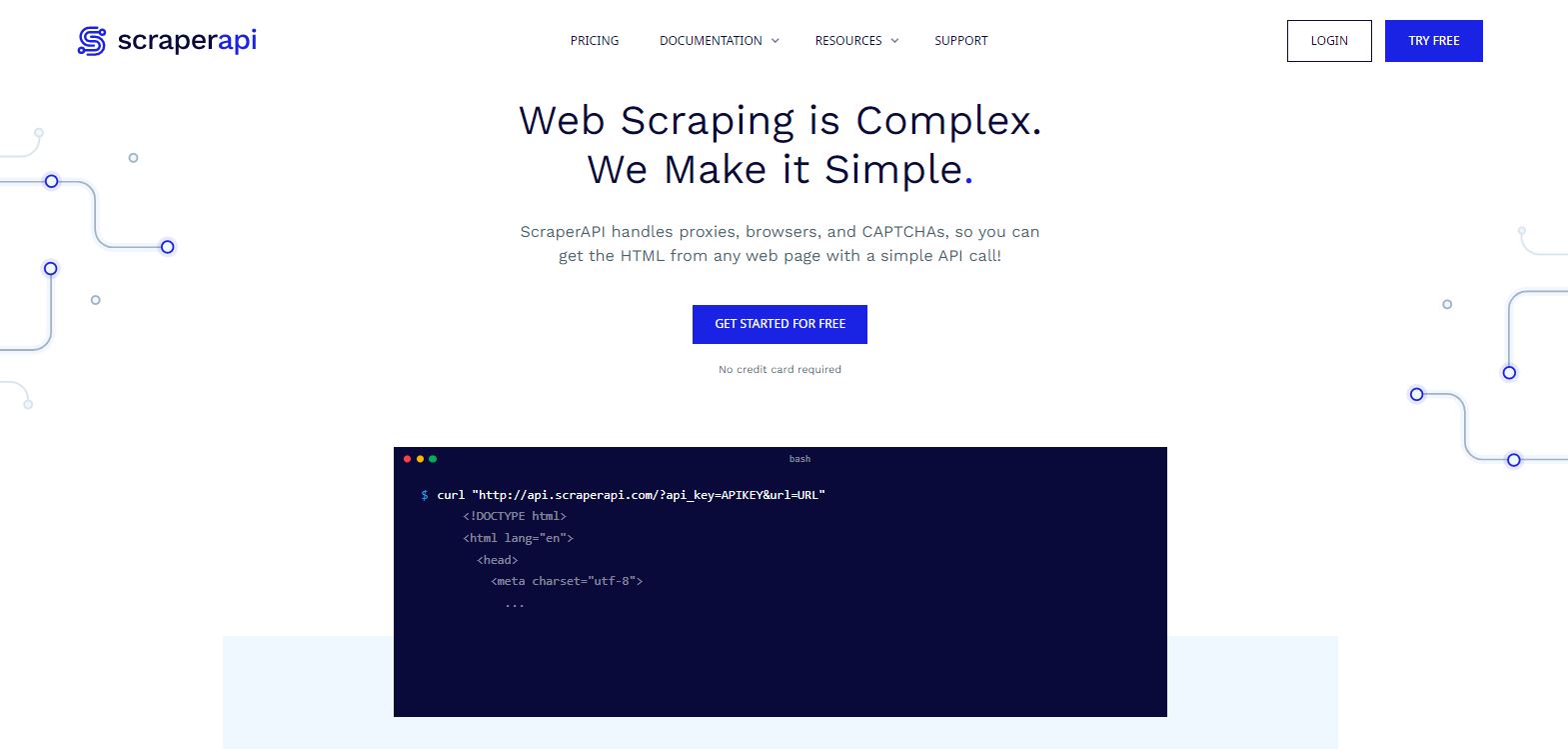

1. ScraperAPI

ScraperAPI is a tool for developers building web scrapers. With a single API request, it handles proxies, browsers, and CAPTCHAs and returns the raw HTML of the target page. It manages its own pool of thousands of proxies from various providers and automatically throttles requests to avoid IP blocks and CAPTCHAs.

It also offers dedicated proxy pools for e-commerce price scraping, search engine scraping, social media scraping, sneaker scraping, ticket scraping, and more.
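As a rough illustration of the "single API request" idea, the sketch below builds one GET URL that wraps the page you want to scrape. The endpoint and the `api_key` and `url` parameter names are assumptions based on how the service is commonly called, so check them against ScraperAPI's current documentation; the helper names are hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint and parameter names; verify against the provider's docs.
API_ENDPOINT = "https://api.scraperapi.com/"


def build_request_url(api_key: str, target_url: str) -> str:
    """Return the single GET URL that asks the service to fetch target_url."""
    query = urlencode({"api_key": api_key, "url": target_url})
    return f"{API_ENDPOINT}?{query}"


def fetch_html(api_key: str, target_url: str, timeout: float = 60.0) -> str:
    """Fetch raw HTML through the service (needs a real key and network access)."""
    with urlopen(build_request_url(api_key, target_url), timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Calling `fetch_html("YOUR_API_KEY", "https://example.com/")` would then return the page's HTML, with proxy rotation and retries left to the service rather than to your own code.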

2. ScrapingBee

ScrapingBee is a web scraping API that aims to let you scrape the web without getting blocked. It provides both standard (data center) and premium (residential) proxies to reduce the chance of being banned while scraping.

It can also render pages in a real browser (Chrome), which lets it support websites that rely heavily on JavaScript.

ScrapingBee is aimed at developers and tech companies who want to manage the scraping process themselves without having to worry about proxies and headless browsers.

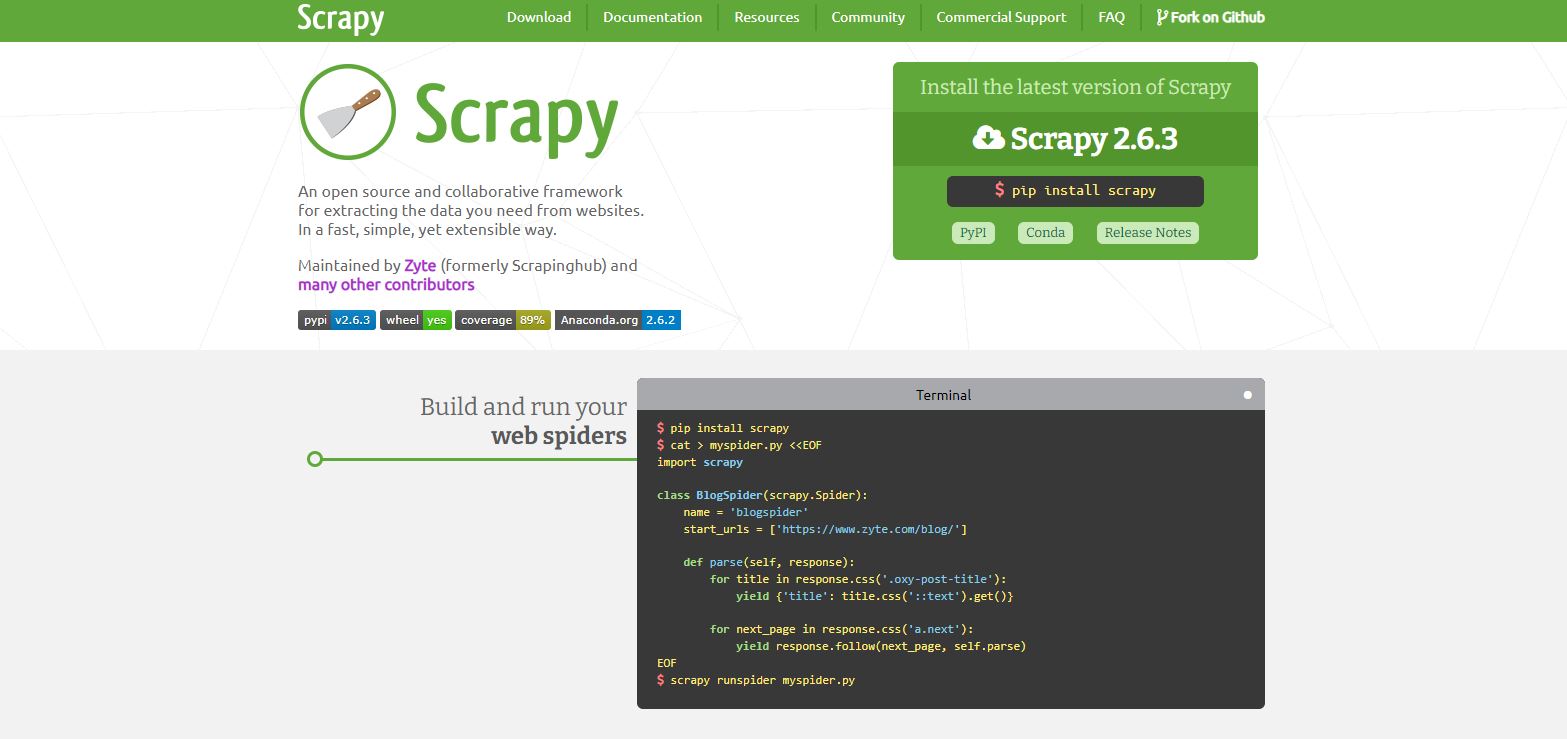

3. Scrapy

Scrapy is a web crawling and web scraping framework for Python developers. Because it is a complete framework, it includes everything needed for web scraping, such as components for making HTTP requests and for extracting data from the downloaded HTML.

It is free and open source, and it can also save the scraped data. However, Scrapy cannot render JavaScript on its own and needs another library for that, such as Splash or the well-known Selenium browser automation tool.

4. Dexi.io

Dexi.io (formerly CloudScrape) collects data from websites without requiring a download, unlike some other services. It lets users set up crawlers and retrieve data in real time using a browser-based editor.

The extracted data can be exported as CSV or JSON or saved to cloud services such as Google Drive and Box.net. Dexi.io supports anonymous data access by providing a number of proxy servers that conceal your identity. Data is kept on Dexi.io's servers for two weeks before being archived.

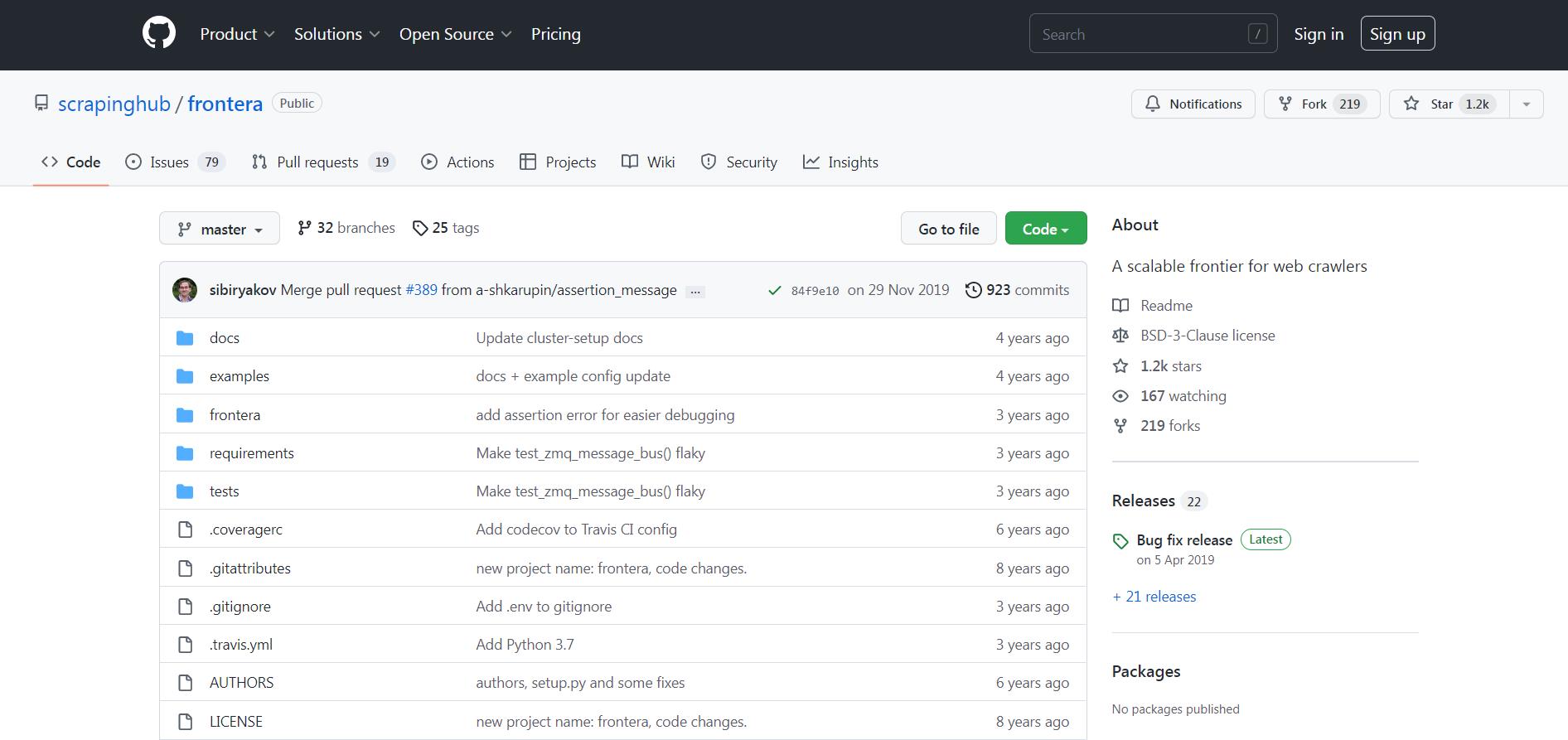

5. Frontera

Frontera is another web crawling tool. It is an open-source framework designed to make building a crawl frontier easier. In more advanced crawling systems, the crawl frontier is the component in charge of the logic and policies to follow while crawling websites.

It sets the rules for the order in which pages are crawled, their priority, how often they are revisited, and any other behavior you want to build into the crawl.

It can be used with Scrapy or with other web crawling frameworks. Frontera is a good fit for developers and tech companies that work in Python.
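To make the idea of a crawl frontier more concrete, here is a minimal sketch in plain Python. It does not use Frontera's API: the `CrawlFrontier` class, its method names, and the fixed revisit interval are all hypothetical, chosen only to show ordering, priority, deduplication, and revisits in one place.

```python
import heapq
import itertools
from typing import Optional


class CrawlFrontier:
    """Toy crawl frontier: decides which URL to fetch next and when to revisit."""

    def __init__(self, revisit_after: float = 3600.0) -> None:
        self.revisit_after = revisit_after  # seconds before a page is due again
        self._heap: list = []               # entries: (due_time, -priority, seq, url)
        self._known: set = set()            # URLs that are already scheduled
        self._seq = itertools.count()       # tie-breaker that keeps insertion order

    def add(self, url: str, priority: int = 0, now: float = 0.0) -> bool:
        """Schedule a newly discovered URL; duplicates are ignored."""
        if url in self._known:
            return False
        self._known.add(url)
        heapq.heappush(self._heap, (now, -priority, next(self._seq), url))
        return True

    def next_url(self, now: float) -> Optional[str]:
        """Pop the earliest-due URL (ties go to higher priority) and reschedule it."""
        if not self._heap or self._heap[0][0] > now:
            return None  # nothing is due yet
        _, neg_priority, _, url = heapq.heappop(self._heap)
        heapq.heappush(
            self._heap,
            (now + self.revisit_after, neg_priority, next(self._seq), url),
        )
        return url
```

A crawler would call `add()` for every link it discovers and `next_url()` whenever a worker is free. A real frontier would also persist its state and apply per-domain politeness rules, which this sketch leaves out.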

6. Apify

Apify is a web scraping and automation platform that can create an API for any website and extract structured data from it. For websites with strong anti-scraping measures, Apify offers its own smart proxy service to make scraping more reliable.

Apify offers hundreds of ready-made scrapers for well-known websites, and affordable custom solutions can be ordered quickly and easily. People without coding knowledge can set up, schedule, and run an Apify scraper, yet the platform is also powerful enough for seasoned developers.

7. ParseHub

ParseHub can crawl one or many web pages. It supports sessions, cookies, AJAX, JavaScript, and redirects. ParseHub uses machine learning to recognize complex documents on the web and produces output files in the required data format.

ParseHub extracts data from websites on request and stores it locally. Users can get started through a friendly user interface, backed by high-quality support, without even a basic understanding of programming.

With ParseHub, users can scrape items such as email addresses, IP addresses, images, phone numbers, prices, and other disparate web data. A desktop version of ParseHub is also available for Windows, Mac OS X, and Linux.

8. Octoparse

Octoparse is another web scraping tool with a desktop application (Windows only, sorry macOS users). It closely resembles ParseHub. Although it costs less than ParseHub, we found it more challenging to use.

You can run both cloud extraction (on the Octoparse cloud) and local extraction (on your own computer). Pro: affordable prices. Cons: steep learning curve, Windows only.

9. ZenRows

ZenRows is one of the more popular web scraping APIs because it addresses the biggest challenge in extracting data from the web: getting blocked. If you are looking for an easy solution, this is one.

You can rely on ZenRows to scrape web pages using premium proxies, JavaScript rendering, a headless browser, and more. Rather than reinventing the wheel and spending a large budget, you can crawl through API calls.

The tool works with Python and adapts to any business size, from small to enterprise. Start with 1,000 free credits and try it yourself.

10. Sitechecker

Sitechecker’s Link Extractor tool offers a versatile solution for web scraping tasks. With its user-friendly interface and efficient link extraction algorithm, users can easily extract links from web pages without the need for complex coding or manual effort. This tool enables users to scrape internal and external links, analyze link attributes such as anchor text and rel attributes, and export the extracted data in various formats for further analysis.

Whether you need to gather backlink data, extract links for content analysis, or perform competitor research, the Link Extractor tool provides a reliable and efficient solution to meet your web scraping needs.
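For readers who want to see what link extraction involves under the hood, the following is a small sketch using only Python's standard library. It is not Sitechecker's implementation; the `LinkExtractor` class and `extract_links` helper are hypothetical names, and "internal" is assumed here to mean "same host as the page being analyzed".

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkExtractor(HTMLParser):
    """Collect every <a href> with its anchor text and rel attribute."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list = []
        self._current = None  # link being read, until its closing </a>

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return  # anchors without a target are skipped
        url = urljoin(self.base_url, href)  # resolve relative links
        same_host = urlsplit(url).netloc == urlsplit(self.base_url).netloc
        self._current = {
            "url": url,
            "text": "",
            "rel": attributes.get("rel") or "",
            "internal": same_host,
        }

    def handle_data(self, data):
        if self._current is not None:
            self._current["text"] += data

    def handle_endtag(self, tag):
        if tag == "a" and self._current is not None:
            self._current["text"] = self._current["text"].strip()
            self.links.append(self._current)
            self._current = None


def extract_links(html: str, base_url: str) -> list:
    """Parse an HTML string and return one dict per link found."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links
```

Each returned dictionary holds the resolved URL, the anchor text, the `rel` value, and an internal/external flag, which is enough to write the results to CSV or JSON for further analysis.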

Wrapping Up

With this variety of tools at your disposal, you have plenty of alternatives if one does not suit your use case. Since web scrapers make it far easier to extract data from online sites, there is little reason not to use them to develop insights.